OMFG what is this? After working with Claude Code for many months and loving it (once I had learned its quirks), I scaled up my work with TeleClaude and was juggling 3 or 4 projects simultaneously, which finally maxed out my Anthropic plan. So I gave Codex CLI a try, expecting a similar experience, but it was anything but.

What happened? Well, asking it for advice was helpful, and asking it to do some coding after carefully telling it what to do went kinda OK. But it just goes haywire when you ask it things Claude gets right 90% of the time. So I figured I needed to add guard rails, and I ported my `/next-work` prompts (see my ClaudeConfig repo), expecting a similar experience. Again, nothing even came close:

- It did NOTHING with the front matter, and it could not pick up intent from short sentences. Claude would immediately get it and call the `/next-work` command when I asked “what’s next?”, “next”, or even just “work”. Codex: NOTHING!
- So I prompted it directly with `/prompts/next-work`, and off it went. But it was unstoppable. I saw it do something I didn’t like and hit ESC to interrupt it and talk “over” it (which works as you’d expect in Claude, where it feels like talking to a human), but it wouldn’t listen and just resumed what I had interrupted, totally ignoring what I said. DANGEROUS!

I will report more later if I feel the need, but I just had to get this off my chest. Bottom line:

Use Claude Code when you expect human-like intuition from your AI assistant, so you can iterate and build. ONLY use Codex CLI for isolated tasks and exploratory thinking.

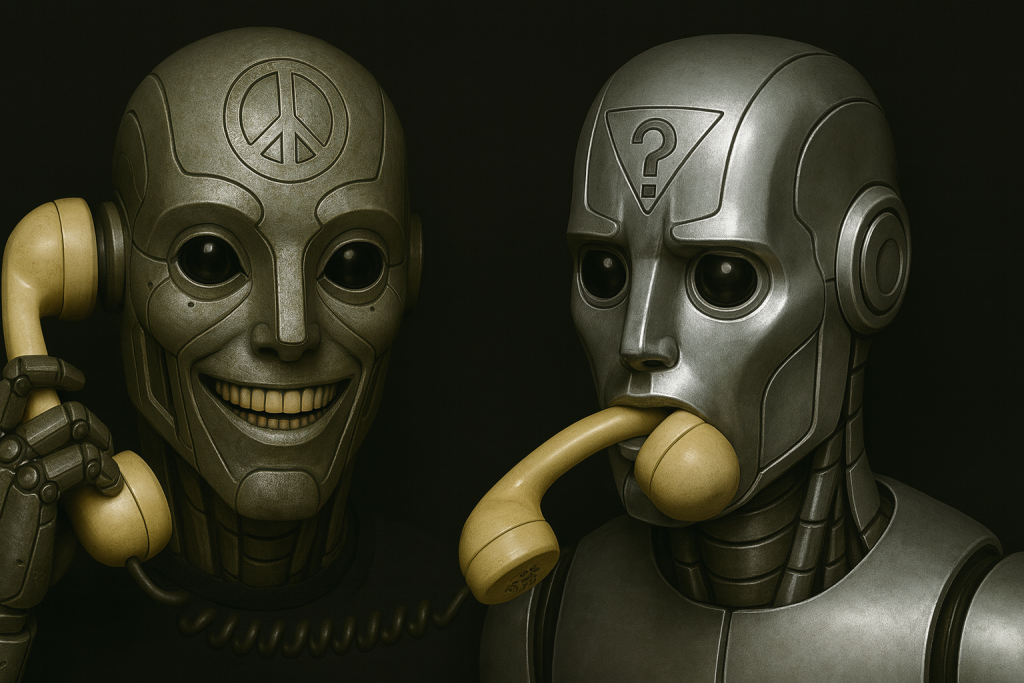

I have high hopes for AI helping us save humanity, and I even made helping to build an alternative SkyNet part of my objective with TeleClaude, but now I am seeing the other side of that coin. Eliezer Yudkowsky might be right after all: machines still lack a lot of smarts, and that might do us all in one day. Let’s hope the race is won by the benevolent ones…